Why Is 13 Considered an Unlucky Number?

The number 13 has long been considered unlucky in many Western cultures. Even today, in a world far less superstitious than it once was, a surprising number of people have a genuine, deep-rooted fear of the number 13, known as triskaidekaphobia. For this reason, many hotels don’t list a 13th floor (Otis Elevators reports 85% of its elevator panels omit the number), and many airlines skip row 13. The more specific yet closely related fear of Friday the 13th, known as paraskevidekatriaphobia, results in financial losses in excess of $800 million annually in the United States, as significant numbers of people avoid traveling, getting married, or even working on the unlucky day.

But why is 13 considered such a harbinger of misfortune? What has led to this particular number being associated with bad luck? While historians and academics aren’t entirely sure of the exact origins of the superstition, a handful of historical, religious, and mythological factors may have combined to create the very real fear surrounding the number 13.

The Code of Hammurabi

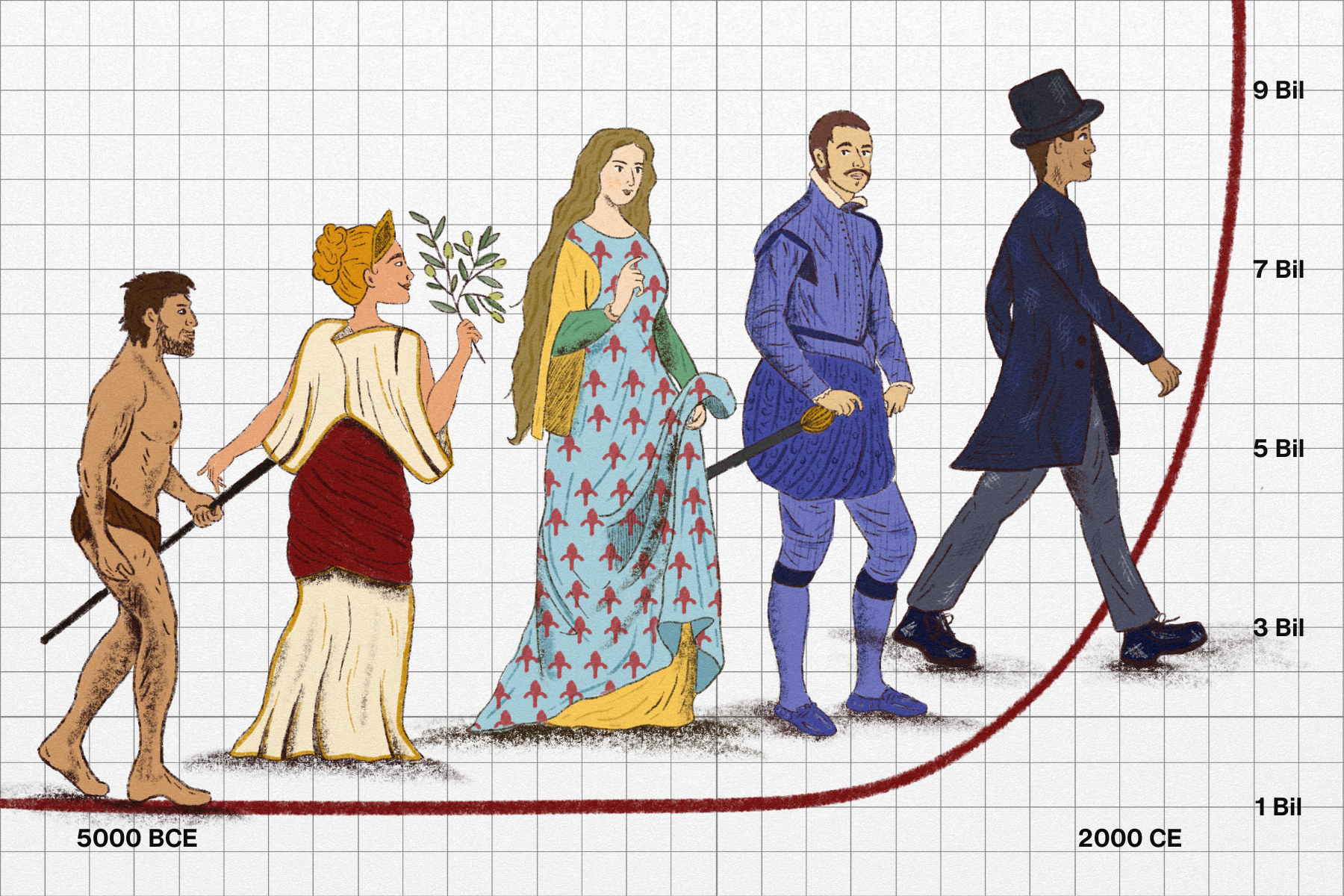

The Code of Hammurabi was one of the earliest and most comprehensive legal codes to be proclaimed and written down. It dates back to the Babylonian King Hammurabi, who reigned from 1792 to 1750 BCE. Carved onto a massive stone pillar, the code set out some 282 rules, including fines and punishments for various misdeeds, but the 13th rule was notably missing. This omission is often cited as one of the earliest recorded instances of 13 being perceived as unlucky and therefore left out. Some scholars argue, however, that it was simply a clerical error. Either way, it may well have contributed to the long-standing negative associations surrounding the number 13.