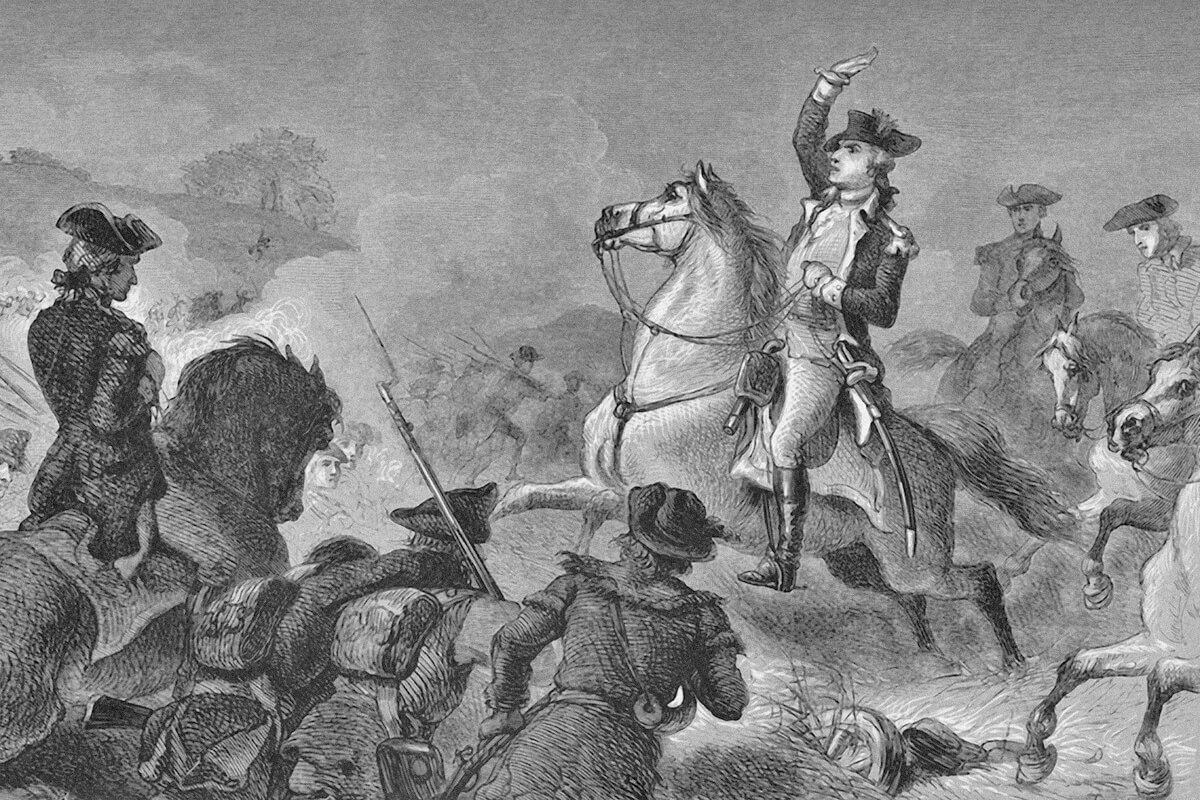

7 Things You Forgot Happened During the Revolutionary War

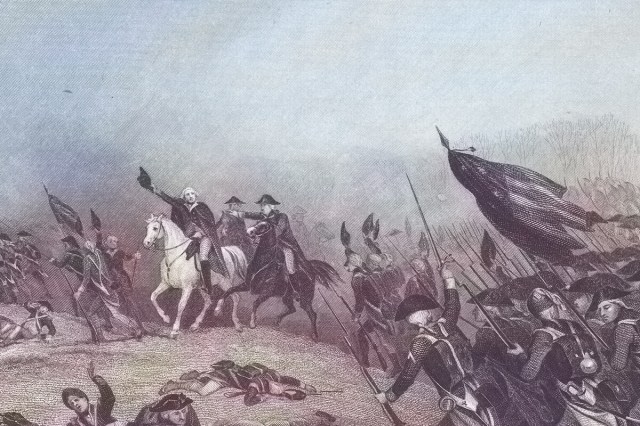

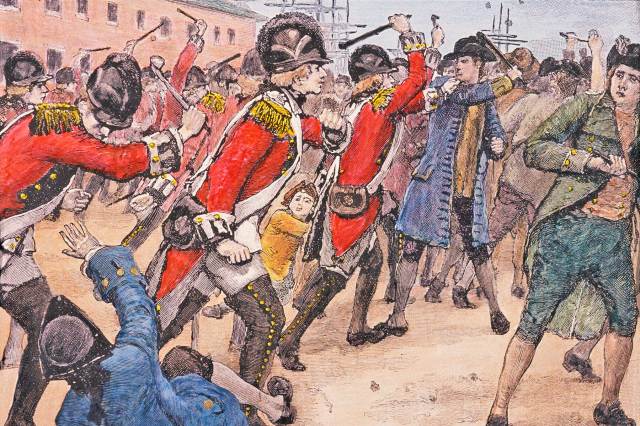

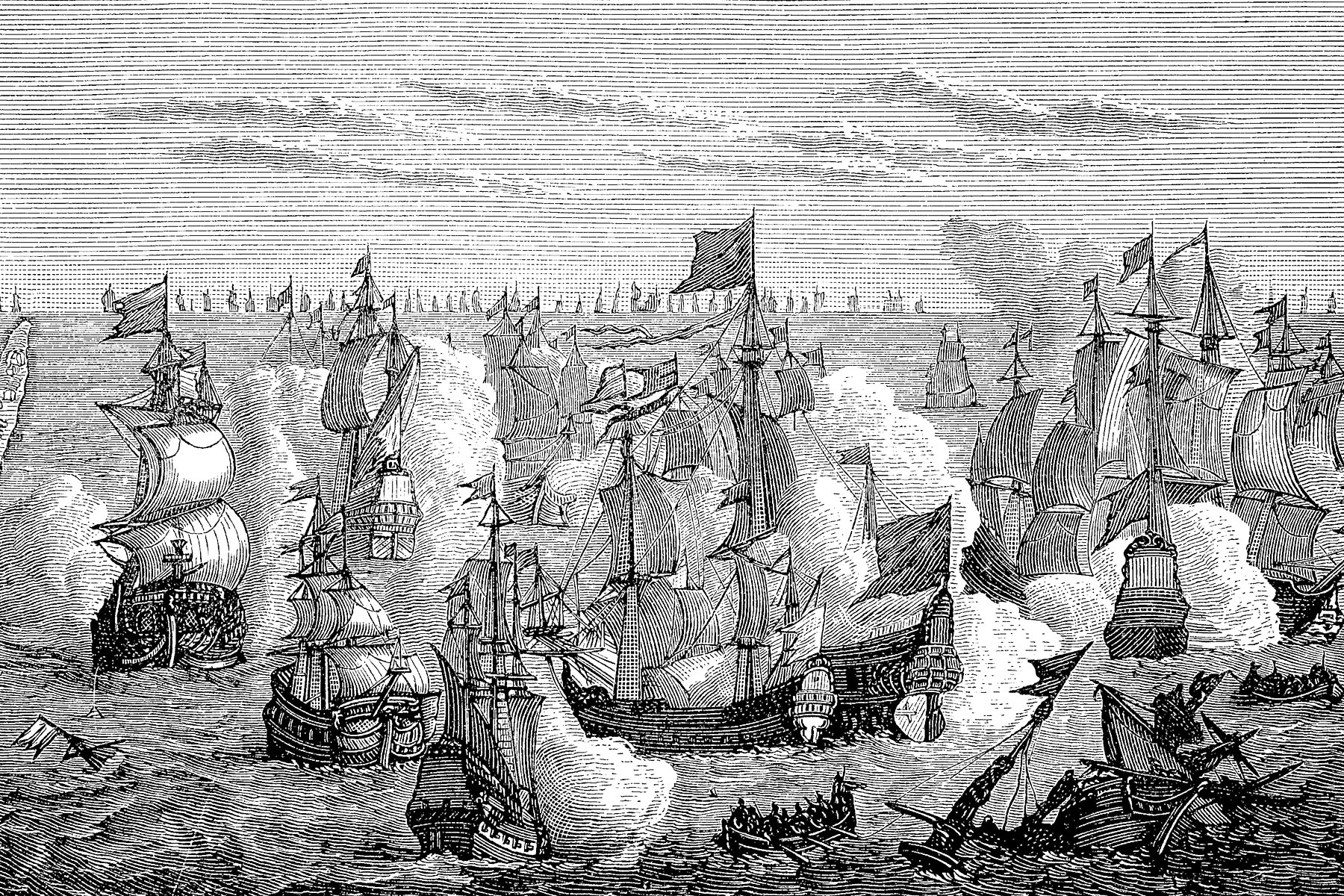

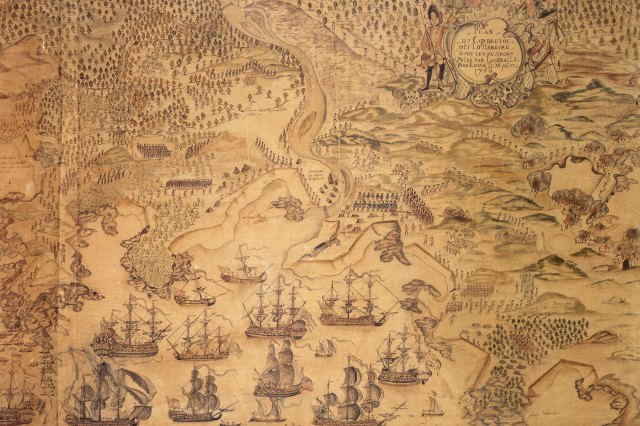

During the American Revolution, 13 British colonies in North America fought for independence from British rule in what became one of the defining conflicts in history. Certain moments from the Revolutionary War, which lasted from 1775 to 1783, have become etched into popular memory. But the Revolution was a long, complex struggle, and for every famous story of the era, such as the Boston Tea Party or Washington crossing the Delaware, there are lesser-known events that rarely make it into textbooks. Here are some of the most fascinating but often overlooked events that unfolded during America's fight for independence.

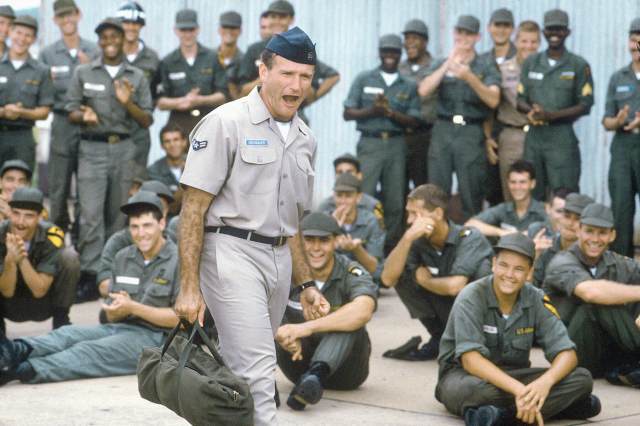

A Woman Disguised Herself as a Man To Fight

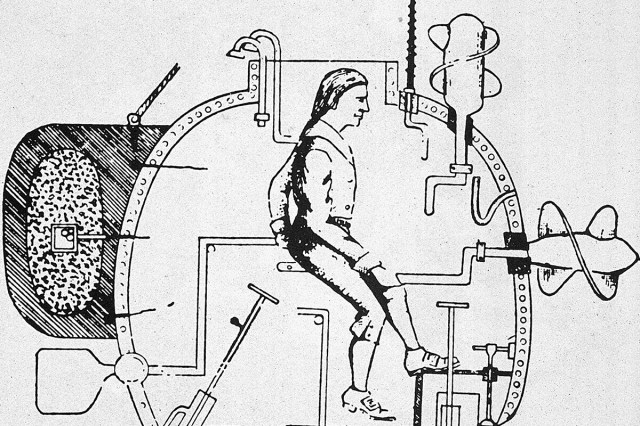

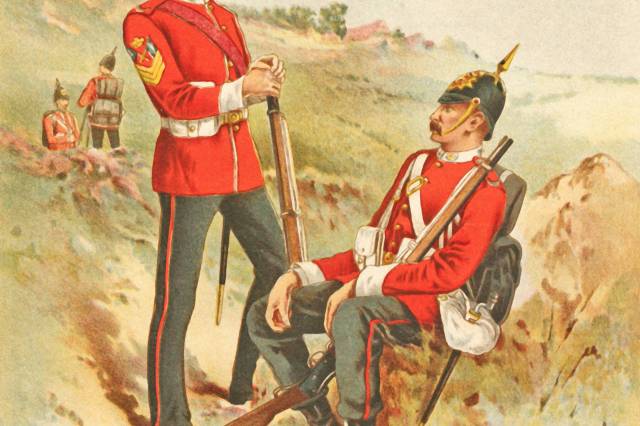

Women weren't permitted to serve in the military during the Revolutionary War, but they were nonetheless instrumental throughout the conflict, whether sewing uniforms, tending to the wounded, or even acting as spies. Some, such as Massachusetts weaver and schoolteacher Deborah Sampson, took a more direct approach.

In 1782, Sampson disguised herself as a man and enlisted in the Fourth Massachusetts Regiment under the name Robert Shurtliff. She served in the Continental Army for more than a year, fighting in several battles and even treating her own wounds, including removing a bullet from her leg. (Another bullet, too difficult to remove, stayed in her leg for the rest of her life.)

In 1783, while in Philadelphia, she fell ill and was taken to a hospital, where a high fever caused her to lose consciousness, which ultimately led to the discovery of her true identity. Sampson was honorably discharged later that year, after roughly a year and a half of service. After starting a family, she petitioned for back pay and a disability pension for the injuries she had sustained in battle.