Why Is Table Salt Iodized?

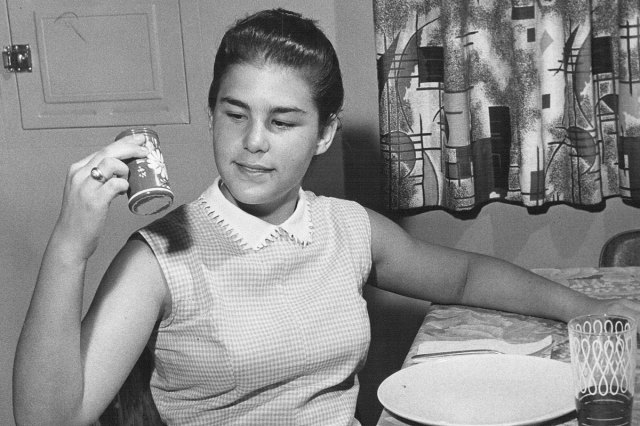

It’s probably sitting in your kitchen cabinet right now: a container of salt, maybe the familiar blue Morton Salt canister with the little girl carrying an umbrella, with the word “iodized” somewhere on the label.

The curious fact that most table salt contains iodine — a trace mineral — is the result of a long chain of historical discoveries. The story begins with seaweed ash and gunpowder, runs through a scientific priority battle, and ends with one of the most effective nutritional interventions ever devised. Adding iodine to salt helped vanquish a problem that had plagued humankind for millennia — and the effects occurred within a single generation.

A Violet Vapor in a Seaweed Vat

The story of iodine starts in 1811, toward the end of the Napoleonic Wars, when French chemist Bernard Courtois was searching for a new way to make saltpeter, or potassium nitrate, a crucial ingredient in gunpowder. France was running out of wood, whose ash had traditionally been used in making saltpeter, and the government urgently needed alternatives. Seaweed, abundant along the coast of Normandy, seemed promising.

Courtois used sulfuric acid to clean his tanks, and one day, after a particularly strong batch of acid had been applied, he noticed something unusual: a billowing of violet vapor. When the vapor condensed, it left purplish-black crystals that gleamed on the sides of the vats. Courtois had unknowingly isolated a new element.

He reported his discovery in 1813 in the Annales de chimie, in a paper titled “Découverte d’une substance nouvelle dans le vareck” — vareck being the French word for washed-up seaweed. On the second page of that paper, Courtois labeled the new substance iode, the French form of “iodine,” after the Greek word for “violet-colored,” ἰοειδής (ioeidḗs).

Within months, two major scientists — English chemist Humphry Davy and French chemist Joseph-Louis Gay-Lussac — independently studied Courtois’ samples and claimed to have isolated and identified the element. A scientific priority quarrel followed, but a surprisingly polite one. Both men ultimately credited Courtois as the true discoverer.

It was Davy who suggested “iodine” as the English term, by analogy with chlorine (the two elements belong to the same group, the halogens). The element was officially ushered into the chemical pantheon in 1813, and its biological importance became clear almost immediately.