How Did the Romans Represent Zero?

The origins of zero, both as a mathematical symbol and as a concept, are fascinating. As early as 5,000 years ago in ancient Mesopotamia, Sumerian scribes used a slanted double wedge between cuneiform symbols to denote the absence of a number. In the third century BCE, the Babylonians developed a numerical system based on values of 60 and used a symbol of two small wedges to differentiate between tens, hundreds, and thousands. Around the fourth century CE, the Maya independently developed their own symbol to represent zero on their calendar. But each of these early systems recognized the symbolic zero only as a placeholder, not as a unique number with its own properties and value.

It was around the fifth century CE that mathematicians in India first formalized the use of zero as both a placeholder and a number with intrinsic value, using a small dot to signify zero. This innovation spread through Islamic scholars, who refined the concept and integrated it into advanced calculations and algebra. It wasn’t until the 12th century that this zero reached Europe, transforming mathematics by making complex calculations possible. Given this was several centuries after the fall of the Roman Empire, it raises the question: What did the Romans do without zero?

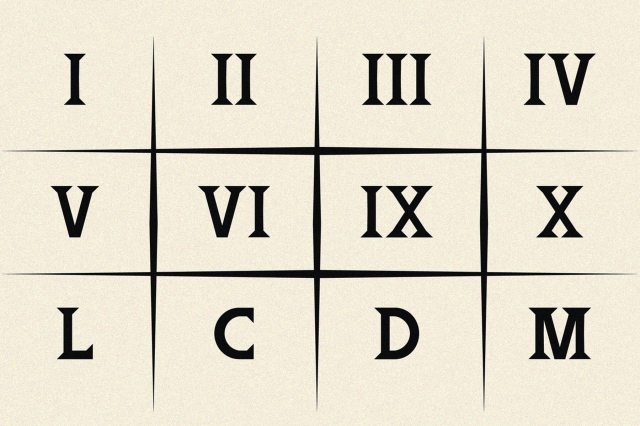

The Roman Numeral System Didn’t Have Zero

In contrast to other ancient cultures, the Romans, whose numerical system was constructed quite differently, had no mathematical symbol for zero. Roman numerals relied on seven specific symbols for values: I (1), V (5), X (10), L (50), C (100), D (500), and M (1,000), which were placed next to each other to represent all other numbers, usually through addition. For example, XII represents 12 (10 + 1 + 1). Subtraction was used as well, though it wasn’t common until the Middle Ages. This was done by placing a smaller numeral before a larger one; for example, IX represents 9 (10 – 1). Simple arithmetic such as addition and subtraction was done on a counting board known as an abacus, and the value of “none” could be represented on the tool by an empty row.
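To make the additive and subtractive rules concrete, here is a minimal Python sketch that reads a numeral by those two rules. The function name `roman_to_int` and the `VALUES` table are illustrative choices, not a historical method, and the sketch assumes its input is a well-formed numeral, so it does no validation.

```python
# Values of the seven Roman numeral symbols.
VALUES = {"I": 1, "V": 5, "X": 10, "L": 50, "C": 100, "D": 500, "M": 1000}


def roman_to_int(numeral: str) -> int:
    """Read a Roman numeral using the additive and subtractive rules.

    Assumes the input is well formed; malformed strings are not rejected.
    """
    total = 0
    for i, symbol in enumerate(numeral):
        value = VALUES[symbol]
        # A smaller symbol placed before a larger one is subtracted (IX = 10 - 1).
        if i + 1 < len(numeral) and value < VALUES[numeral[i + 1]]:
            total -= value
        else:
            # Otherwise the symbol's value is simply added (XII = 10 + 1 + 1).
            total += value
    return total


print(roman_to_int("XII"))  # 12
print(roman_to_int("IX"))   # 9
```

In this sketch, the only input that yields 0 is the empty string. No combination of the seven symbols can express "none," which loosely parallels the empty row on the abacus.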

The Romans did not have a symbol for zero in mathematical computations because they didn’t need it — but they did need a way to denote the absence of a quantity, such as in record-keeping. In these cases, the Latin words nulla or nihil, meaning “none” or “nothing,” served as linguistic placeholders and were abbreviated using N. These words, however, had no mathematical function; they were simply an expression of emptiness rather than part of the formal numerical system. This convention shows that Romans recognized the practical need to denote “nothing” even while they lacked the abstract mathematical understanding of zero as its own number.